In the following article we will attempt to build an open source file sharing app which is password protected and can be used by multiple users. This is a fairly common project, so the challenge will be to deliver quality at a faster pace, with the help of AI agents.

AI has started a new era in development, and many think that it's the end of programming jobs. On the contrary, I think that after an adjustment period, this craft will bloom in a different form than the one we know now.

We will use AI and start by defining as many aspects of our project as possible, starting from the file structure and going more and more in depth. Since versioning is one of the most important aspects, we will start our project with a new folder and a new git repo:

```bash
mkdir open-file-sharing
cd open-file-sharing
git init
```

FYI: this is not intended to be a comparison between AI providers; instead, it treats AI as a whole, as a helper in building the project. Multiple runs were done with each of them, and only the best result is described, without mentioning which provider produced it.

Also, this is my first project where I step back and let the AI agents do most of the work, at least initially. I am very curious to see the outcome and draw some conclusions. 🙂

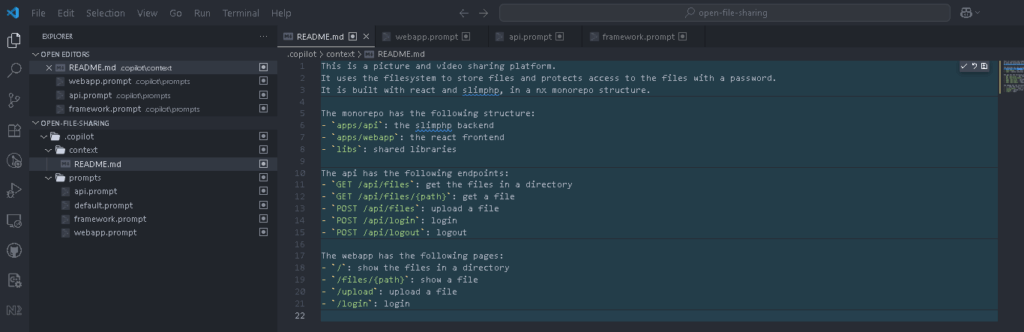

As far as the prompts go, I noticed that the results are slightly better when you clearly tell the AI to take the whole project into consideration. Also, since saying "please" is no longer recommended because it wastes energy, I skipped filler phrases like "You are an expert developer" as well.

The git repository for the created application is available here:

Instructions

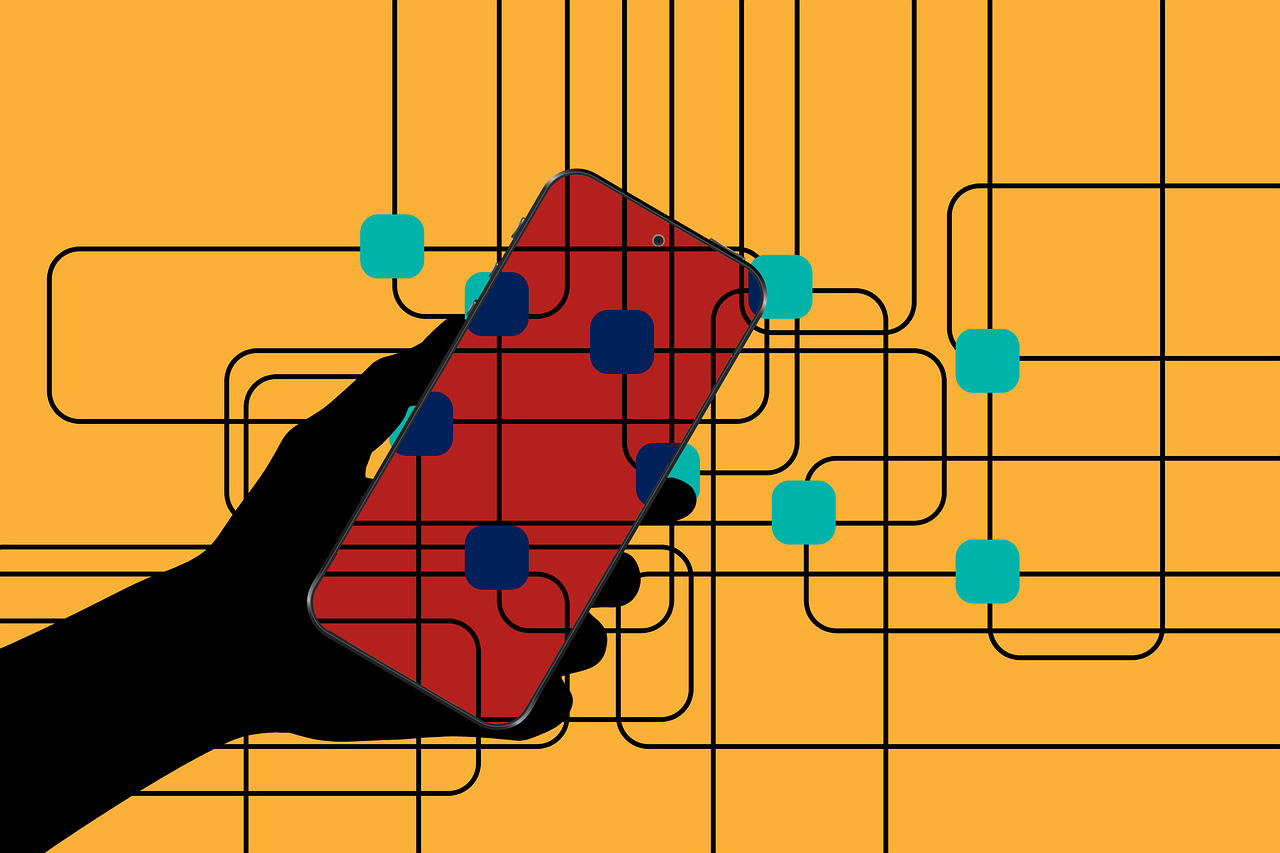

We will use the ./.copilot folder to store most of the information about the project. Instead of starting from scratch, we will write the first prompt. The prompt is pretty close to the general idea, but if you want to improve it you can make that your first query to the AI with something like "Improve this first requirement for the project".

I want to create a picture and video sharing platform which uses the filesystem to store files and protects access to the files with a password. we will use react and slimphp, in a nx monorepo structure. for this, generate the contents of the .copilot folder which will help ai agents generate the right code.

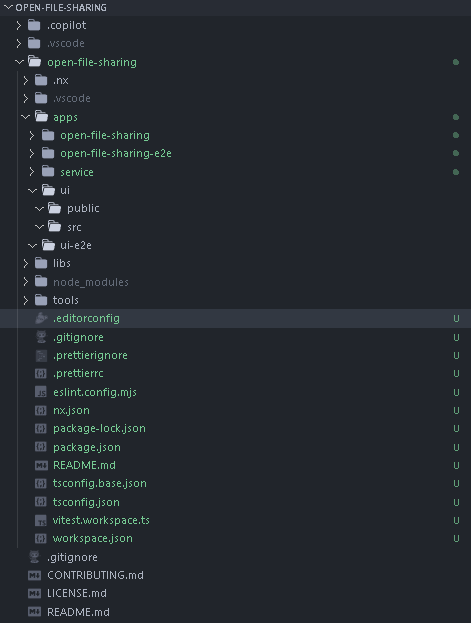

Starting from here, after multiple prompts with multiple AI providers combined with manual editing, we got to the following structure:

```bash
.copilot/
|-- backend.md
|-- frontend.md
|-- infrastructure.md
|-- monorepo.md
|-- project.md
.gitignore
CONTRIBUTING.md
LICENSE.md
README.md
```

Each of these files goes into detail about how that aspect of the application will be handled.

project.md

This is the main file where we describe our project for the AI. These files can also serve as context for humans, since their details and structure provide a lot of information.

# Project Overview

> We are building a secure picture and video sharing platform with password protection.

## Architecture

This project is structured as a full-stack application within an Nx monorepo, facilitating code sharing and organized development between the frontend and backend services.

### Core Technology Stack

- `Frontend`: React 18+ with TypeScript and TailwindCSS

- `Backend`: SlimPHP 4 with PHP 8.1+

- `Structure`: Nx Monorepo for efficient code organization

- `Storage`: File system-based storage with secure access control

- `Security`: JWT-based authentication and password protection

- `Infrastructure`: Docker/Podman with compose files for consistent environments

- `API Definition`: OpenAPI/Swagger for type-safe API contracts

## Features

### Contracts

- Create a clear contract between what we implement in frontend and backend

- The contract will be defined with OpenAPI

- Create scripts to generate the PHP DTOs and TypeScript types

### Authentication

- Use file based storage for the users who can access the project

- Create a password protected mechanism for certain routes of the app, both frontend and backend

### Files

- File upload, download, and delete in a password protected area

- The frontend should show a progress bar for the uploads

- Drag and drop should be possible

- Photos and videos will be uploaded

## Directory Structure

The monorepo should follow a standard Nx layout:

```bash
repo-root/
| apps/ # Contains the main applications
| |-- service # The SlimPHP application
| |-- ui # The React application
| |-- ui-e2e # End to end testing application
| libs/ # Shared libraries and utilities
| |-- shared-types/ # Shared contracts package
| | |-- openapi.yaml # API spec (source of truth)
| | |-- package.json # so React can import TS types
| | |-- composer.json # so PHP can load schema/contracts
| | |-- generated/
| | | |-- php/ # generated DTOs for Slim
| | | |-- ts/ # generated types for React
| | |-- README.md # instructions and information about the contracts
| |-- utils/ # Utils shared between apps
| |-- testing/ # Common test utilities
| | ...
| tools/ # Shared tools for managing the project
| |-- scripts/ # Build and deployment scripts
| |-- docker/ # Docker configurations
| |-- generators/ # Custom NX generators
| |-- ...
| ...
```

### Frontend

- React 18+ with TypeScript

- TailwindCSS for styling

- React Query for API state

- React Hook Form for forms

- Axios for HTTP requests

- Jest and RTL for testing

```bash
ui/
| src/
| |-- components/ # Reusable UI components
| |-- features/ # Feature-specific components
| |-- hooks/ # Custom React hooks
| |-- services/ # API services
| |-- store/ # State management
| |-- types/ # TypeScript types
| |-- utils/ # Helper functions
| tests/ # Test files
| public/ # Static assets
```

### Backend

- SlimPHP 4 for lightweight, fast API development

- PHP 8.1+ leveraging modern PHP features

- PSR-7/PSR-15 for HTTP message interfaces and middleware

- JWT for stateless authentication

- PHP-DI for dependency injection

- PHPUnit for testing

- PHP_CodeSniffer for code style enforcement

```bash
service/
| src/
| |-- Auth/ # Authentication related code
| |-- Controllers/ # API endpoints
| |-- Services/ # Business logic
| |-- Models/ # Data models
| |-- Middleware/ # Request/Response middleware
| |-- Utils/ # Helper functions
| tests/ # Test files
| config/ # Configuration files
| public/ # Public entry point
```

## Code Quality

When generating code, always consider the context of the entire project and maintain consistency with the existing codebase. The AI agent should prioritize code generation that aligns with the specified technology stack and adheres to best practices for monorepo development. Focus on creating modular, testable code for both the React and SlimPHP applications.

- Write clean, readable, and maintainable code

- Add comments where necessary to explain complex logic

- Follow best practices for both React and PHP development

- Use security linters

- Regular dependency updates

- Security-focused code reviews

- Keep secrets in .env files

- Use environment variables

- Regular security audits

### Goals for AI Code Generation

- Ensure consistent integration between React frontend and SlimPHP backend

- Use Nx generators and proper workspace configuration for both frontend and backend apps

- Maintain modularity and reusability of code

- Always prefer clarity and readability over brevity

- Use TypeScript types and PHP strict types

- Follow RESTful API conventions

- Keep security in mind (validate inputs, escape outputs)

- Ensure all code snippets are production-ready

- When in doubt, default to modular and scalable solutions

### Security Standards

- Validate all inputs

- Sanitize all outputs

- Use prepared statements for DB queries

- Implement proper CORS policies

- Follow OWASP security guidelines

- Use environment variables for secrets

- Implement rate limiting

- Log security-related events

### Testing Guidelines

- Maintain minimum 80% code coverage

- Critical paths should have 100% coverage

- Write tests for bug fixes to prevent regressions

- Write tests before fixing bugs (TDD for bug fixes)

- Keep tests focused and atomic

- Use meaningful test descriptions

- Follow AAA pattern (Arrange-Act-Assert)

- Mock external dependencies and APIs

### Error Categories

#### Validation Errors

- Invalid input data

- Missing required fields

- Wrong data types

- Business rule violations

#### Authentication Errors

- Invalid credentials

- Expired tokens

- Missing tokens

- Invalid permissions

#### File Operation Errors

- Upload failures

- Storage errors

- File size limits

- Invalid file types

#### Network Errors

- API timeouts

- Connection failures

- Rate limiting

- CORS issues

Rewrite

After more back and forth with the AIs, we got to a new structure which is clearer and more comprehensive:

```bash
.copilot/
├── 00-overview.md # Project overview and quick navigation
├── 01-workspace.md # Workspace setup and configuration
├── 02-workflow.md # Development workflow and processes
├── architecture/ # Architecture documentation
│   ├── backend.md # Backend architecture
│   ├── frontend.md # Frontend architecture
│   ├── infrastructure.md # Infrastructure setup
│   └── monorepo.md # Monorepo structure
├── guidelines/ # Development guidelines
│   ├── api-patterns.md # API design patterns
│   ├── code-quality.md # Code quality standards
│   ├── error-handling.md # Error handling patterns
│   ├── performance.md # Performance guidelines
│   ├── security.md # Security guidelines
│   └── testing.md # Testing strategies
└── patterns/ # Design patterns
    └── state-management.md # State management
```

At this point, the content of the .copilot folder seems comprehensive enough to give the AIs a clear direction. So it's time to stop describing and start building.

First prompts

Of course the first prompt after creating the instructions was:

Generate the first version of the app, based on the existing instructions in .copilot.

The output was not so great: it generated something on top of the existing structure, but then generated a parallel one. When asked to combine them, it made an unusable soup of files.

Thus, the approach needed some tweaks: instead of asking for the whole thing, the new approach will be to ask for granular actions.

nx setup

Just implement `nx` as described in the `./.copilot` folder instructions in the root of the project and don't do anything else.

The result of this prompt was that it created the project inside another folder.

After multiple retries, I decided it was time to go manual:

npx nx init

Then we will update the ./nx.json file:

```json
{
  "npmScope": "open-file-sharing",
  "projects": {
    "ui": "apps/ui",
    "service": "apps/service",
    "libs": "libs",
    "tools": "tools"
  },
  "affected": {
    "defaultBase": "master"
  },
  "installation": {
    "version": "21.4.1"
  }
}
```

We will continue by creating the project.json files for each of the important parts of our project:

- `apps/service/project.json`
- `apps/ui/project.json`
- `libs/shared-types/project.json`

Here is an example, for the service application:

```json
{
  "name": "service",
  "sourceRoot": "apps/service/src",
  "projectType": "application",
  "targets": {
    "install": {
      "executor": "nx:run-commands",
      "options": {
        "command": "composer install",
        "cwd": "apps/service"
      }
    }
  }
}
```

Create the `project.json` files where needed and add their content so that dependencies in all projects and libraries can be installed with a single command, e.g. `npm run install:all`

It seems that the above prompt also generates a package.json file at the root of our project. This is not ideal, but it's fine in the end, since we need tools like husky anyway. So the install command will be either of the following:

```bash
npm run install:all
nx run-many --target=install --all
```

Contracts

The next thing to do is to define the contracts between the frontend and the backend. We will do that in the libs/shared-types library, but only after re-examining the contents of .copilot: on closer inspection, some fixes are needed.

Luckily, our commits have been well separated so far, so we can easily tidy up the history of our git repository. After we create another commit, we will rebase and squash it into the commit which refines the .copilot instructions.

git log --oneline

This will output all the commits:

```
52f34bc (HEAD -> master) configure nx for each of the apps and add the global install command
9f5241d add minimal nx support
28382aa refine the prompt instructions
3178ef9 first version of the prompts
```

Currently we don't have a remote set, so there is no backup. This is why we should be extra careful when we fiddle with the git repository history, in this case by rebasing:

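git rebase -i HEAD~4

Once the new commit exists, `28382aa` is `HEAD~3`, and an interactive rebase only lists the commits after its base, so we need `HEAD~4` for `28382aa` itself to be editable. In the list, we move the new instructions commit right after `28382aa refine the prompt instructions` and mark it as `fixup` (or `squash`) to merge it into that commit.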

The next step will be to generate the openapi.yaml file:

Taking into consideration the instructions in the `.copilot` folder, generate openapi spec the content of `libs/shared-types` in match the following structure:

```bash
libs/ # Shared libraries and utilities
|-- shared-types/ # Shared contracts package
| |-- openapi.yaml # API spec (source of truth)
```

Next we will ask for helpers to generate the types:

Create helpers and commands to generate types for typescript and DTOs for php to be used in the application as shared contracts. Implement using php / typescript and related packages and aim for the following structure:

```bash
libs/ # Shared libraries and utilities
|-- shared-types/ # Shared contracts package
| |-- openapi.yaml # API spec (source of truth)
| |-- package.json # so React can import TS types
| |-- composer.json # so PHP can load schema/contracts
| |-- generated/
| | |-- php/ # generated DTOs for Slim
| | |-- ts/ # generated types for React
| |-- README.md # instructions and information about the contracts
```

Add the generate command for both types in the package.json to be able to run it at the root of the project.

The prompt went surprisingly well with very little back and forth and small fixes for the generated commands.

Infrastructure and base apps

We will continue by generating the Docker infrastructure, which goes hand in hand with the minimal setup of the applications.

After we have the basic functionality, which will be one route in the backend, one API call for it in the frontend and containerization support, we will make small improvements which are needed for easier development later.

This last part will be done mostly manually, to save the time the AI would spend trying to guess what we want. Sure, in theory, if the prompt is good enough, the AI will provide exactly what we want, but... writing such a prompt could take as much work as doing the task by hand. 🙂

Infrastructure

Taking into consideration the instructions in the `.copilot` folder, generate container definitions and docker-compose configuration which is also compatible with podman. Use .env.example to store the development variables and add .env to the root .gitignore. Only make an implementation for the development environment and use bind mounts for volumes.

Basic backend

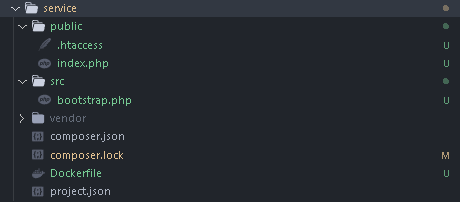

Based on the current contents of the .copilot folder and the current state of the project, generate a minimal implementation of the backend application which only provides a /hello/world api endpoint. The rest of the features will be added in future steps.

The generated code was fine, but some small shortcomings had to be fixed.

The .htaccess file was missing, so I copied it from another project instead of asking the AI to generate it:

```apache
RewriteEngine On
RewriteCond %{REQUEST_FILENAME} !-f
RewriteCond %{REQUEST_FILENAME} !-d
RewriteRule ^ index.php [QSA,L]
```

Also, I updated the definition of the backend in the docker-compose.yml to this:

```yaml
backend:
  build:
    context: ./apps/service
  volumes:
    - ./apps/service:/app
    - ./apps/service/public:/var/www/html
    - /app/vendor
  ports:
    - "8080:80"
```

The final step to get the path rewriting right was to update the autoloader require in the index.php file by adding `src/`:

require __DIR__ . '/../src/vendor/autoload.php';

The generated implementation did not include a storage/ folder, which caused an error while building the container. Copilot made several unsuccessful attempts to fix it, so in the end I stopped the loop and fixed the issue manually by changing the Dockerfile.
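The `.htaccess` rules above implement the usual front-controller pattern: requests for files or directories that exist on disk are served directly, and everything else is handed to `index.php`, where Slim does the routing. A minimal TypeScript sketch of that decision, for illustration only, where `existingPaths` is a hypothetical stand-in for Apache's `-f`/`-d` filesystem checks:

```typescript
// Sketch of the front-controller rule from .htaccess:
//   RewriteCond %{REQUEST_FILENAME} !-f   (not an existing file)
//   RewriteCond %{REQUEST_FILENAME} !-d   (not an existing directory)
//   RewriteRule ^ index.php [QSA,L]
// `existingPaths` is a hypothetical stand-in for Apache's filesystem checks.

interface RewriteResult {
  target: string; // what ends up being served
  rewritten: boolean; // true when the request is handed to index.php
}

function splitOnce(value: string, separator: string): [string, string | undefined] {
  const index = value.indexOf(separator);
  return index === -1 ? [value, undefined] : [value.slice(0, index), value.slice(index + 1)];
}

function frontControllerRewrite(requestUri: string, existingPaths: Set<string>): RewriteResult {
  const [path, query] = splitOnce(requestUri, "?");
  if (existingPaths.has(path)) {
    // A real file or directory: the conditions fail, nothing is rewritten
    return { target: requestUri, rewritten: false };
  }
  // Everything else goes to index.php; the query string is kept (QSA)
  const target = query === undefined ? "/index.php" : `/index.php?${query}`;
  return { target, rewritten: true };
}
```

For example, `/hello/world?name=x` ends up at `/index.php?name=x`, while `/favicon.ico` is served untouched if the file exists. Slim still sees the original `/hello/world` path, because an internal rewrite does not change the request URI.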

Basic frontend

Based on the current contents of the .copilot folder and the current state of the project, generate a minimal implementation of the frontend application which only consumes the /hello/world api endpoint and shows the result on the page. The rest of the features will be added in future steps.

The prompt did a pretty good job with generating a basic app which consumes that endpoint. However, small tweaks are needed here, as well.

The first thing we need is to add node types to the project:

npm i --save-dev @types/node

Since we are making changes to the configuration, this may be the right moment to update the vite.config.ts file:

```typescript
import { defineConfig } from "vite";
import react from "@vitejs/plugin-react";
import path from "path";

// https://vitejs.dev/config/
export default defineConfig({
  plugins: [react()],
  resolve: {
    alias: {
      // "@/..." imports resolve to apps/ui/src
      "@": path.resolve(__dirname, "./src"),
    },
  },
  server: {
    host: true,
    port: 3001,
    watch: {
      // polling makes file watching reliable inside containers with bind mounts
      usePolling: true,
    },
  },
});
```

In `apps/ui/src` create the file `vite-env.d.ts` with the following content:

```typescript
/// <reference types="vite/client" />
```

An interesting addition to the server configuration is the `fs.allow` option, which lets the dev server serve files from the monorepo root and the shared libraries:

```typescript
server: {
  host: true,
  port: 3001,
  watch: {
    usePolling: true,
  },
  fs: {
    // allow serving files from the monorepo root and shared libs
    allow: [
      path.resolve(__dirname, ".."),
      path.resolve(__dirname, "../../"),
      path.resolve(__dirname, "../../libs/"),
    ],
  },
},
```

Environment variables

We will separate the .env.example file at the root into an environment file for the frontend and one for the backend. This also means we need to do some cleanup in the docker-compose.yml file.
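One way to decide which variable goes where is to split the root file by prefix. The sketch below assumes frontend variables use Vite's default `VITE_` prefix (by default, the only variables Vite exposes to client code); the helper names and the variable names in the example are made up, and the parser only handles simple `KEY=VALUE` lines.

```typescript
// Hypothetical helpers: parse simple KEY=VALUE lines, skipping blanks and # comments
function parseEnv(content: string): Array<[string, string]> {
  const entries: Array<[string, string]> = [];
  for (const rawLine of content.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq <= 0) continue; // no key before "=": ignore the malformed line
    // Only the first "=" separates key and value, so values may contain "="
    entries.push([line.slice(0, eq).trim(), line.slice(eq + 1).trim()]);
  }
  return entries;
}

// Frontend gets the VITE_-prefixed variables, the backend gets the rest
function splitEnv(content: string, frontendPrefix = "VITE_"): { frontend: string; backend: string } {
  const frontend: string[] = [];
  const backend: string[] = [];
  for (const [key, value] of parseEnv(content)) {
    const target = key.startsWith(frontendPrefix) ? frontend : backend;
    target.push(`${key}=${value}`);
  }
  return { frontend: frontend.join("\n"), backend: backend.join("\n") };
}
```

Keeping secrets out of `VITE_`-prefixed variables matters because anything exposed to client code ends up in the browser bundle.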

Features

At this point we have a working version of our project, so we can start implementing features.

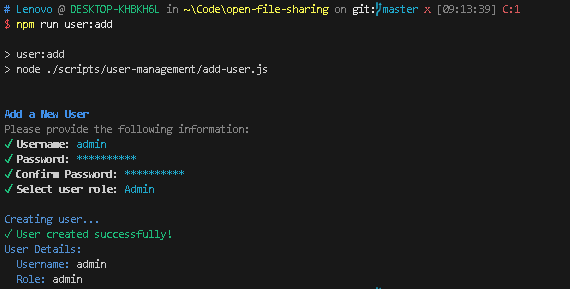

Add users

Following the current implementation and the instructions in `./.copilot`, create a tool to add new users. Each user will be on a new line, the characters will be alphanumeric and each of the users will be stored in a new line on this pattern: `<username>,<role>,<encrypted password>`. Use the backend / service application and add a `./apps/service/bin/console.php` and `./apps/service/src/Commands/AddUserCommand.php` and make sure to have a corresponding command in `composer.json`. Use `./apps/service/.data/users.csv` to store the information and make sure this is configurable for the situation where we build the app into a different structure. Be aware that we will use the same encryption for the login feature which will be implemented later.

This prompt added new console commands to the SlimPHP service for adding users, who will then be able to use the login feature.
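Because each line follows the `<username>,<role>,<encrypted password>` pattern from the prompt, every tool that reads `users.csv` has to agree on how to split it. A hypothetical TypeScript reader/writer, assuming the roles are `user` and `admin` (as in the User schema used later) and that everything after the second comma is the password hash:

```typescript
// Hypothetical reader/writer for users.csv lines: <username>,<role>,<password hash>
type Role = "user" | "admin";

interface UserRecord {
  username: string;
  role: Role;
  passwordHash: string;
}

const USERNAME_PATTERN = /^[A-Za-z0-9]+$/;

function isRole(value: string): value is Role {
  return value === "user" || value === "admin";
}

function parseUserLine(line: string): UserRecord {
  const first = line.indexOf(",");
  const second = first === -1 ? -1 : line.indexOf(",", first + 1);
  if (second === -1) {
    throw new Error(`Malformed user line: ${line}`);
  }
  const username = line.slice(0, first);
  const role = line.slice(first + 1, second);
  // Everything after the second comma is the hash, even if it contains commas
  const passwordHash = line.slice(second + 1);
  if (!USERNAME_PATTERN.test(username)) {
    throw new Error(`Username must be alphanumeric: ${username}`);
  }
  if (!isRole(role)) {
    throw new Error(`Unknown role: ${role}`);
  }
  return { username, role, passwordHash };
}

function parseUsersCsv(content: string): UserRecord[] {
  return content
    .split(/\r?\n/)
    .filter((line) => line.trim() !== "")
    .map(parseUserLine);
}

function serializeUser(user: UserRecord): string {
  return `${user.username},${user.role},${user.passwordHash}`;
}
```

Splitting only on the first two commas matters: bcrypt hashes contain no commas, but Argon2 hashes produced by PHP's `password_hash()` do (for example `$argon2id$v=19$m=65536,t=4,p=1$...`), so a naive `split(",")` would corrupt them.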

Following the current implementation and the instructions in `./.copilot`, add a new command in package.json at the root of the project and use packages like @inquirer/prompts, for the interaction with the user to provide the needed information.

This prompt adds another way to create users, which is a more interactive and user friendly one:

Related packages:

Implement login

In the backend service create CORS middleware and add a config.php at the root of the project.

This prompt generated all sorts of changes, as the AI assumed we wanted tests as well. Together with a small mess in the dependencies it chose, basically everything had to be fixed manually at this point. However, the generated CORS middleware was pretty good, so that part was left mostly intact.
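For readers less familiar with CORS, the decision such a middleware makes can be sketched independently of PHP. The TypeScript below is a hypothetical, framework-agnostic version, not the generated Slim middleware; the allowed origin `http://localhost:3001` is assumed from the Vite dev server port configured earlier.

```typescript
// Hypothetical, framework-agnostic sketch of the decision a CORS middleware makes
interface CorsConfig {
  allowedOrigins: readonly string[];
  allowedMethods: readonly string[];
  allowedHeaders: readonly string[];
}

interface CorsDecision {
  headers: Record<string, string>;
  // Preflight (OPTIONS) requests are answered right away, without a route handler
  shortCircuit: boolean;
}

function corsDecision(method: string, origin: string | undefined, config: CorsConfig): CorsDecision {
  const headers: Record<string, string> = {};
  if (origin !== undefined && config.allowedOrigins.includes(origin)) {
    // Echo the allowed origin instead of "*", so only known frontends are accepted
    headers["Access-Control-Allow-Origin"] = origin;
    headers["Access-Control-Allow-Methods"] = config.allowedMethods.join(", ");
    headers["Access-Control-Allow-Headers"] = config.allowedHeaders.join(", ");
    // The response depends on Origin, so caches must not reuse it across origins
    headers["Vary"] = "Origin";
  }
  return { headers, shortCircuit: method.toUpperCase() === "OPTIONS" };
}
```

Answering the `OPTIONS` preflight before any route runs is what allows the browser to follow up with the real request carrying the `Authorization` header.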

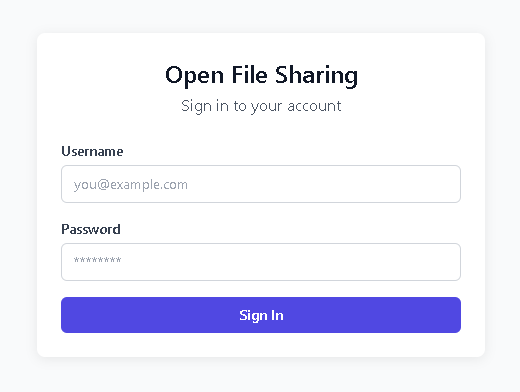

Refactor the frontend application in accordance with .copilot and the api, implement basic styling according to the requirements and create the login form.

In contrast to the backend, the frontend was generated very, very well, with good practices and a clear structure.

Following the current implementation and the instructions in `./.copilot`, implement the login route in the backend application and make sure both frontend and backend use the same types and implement the contract between them properly.

The implementation of this prompt was almost spot on again. It was missing the DTOs in the backend part, but to be honest, at this point I was not sure what needed to be done there either. 🙂
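With a shared contract, the frontend call for the login route can be fully typed. A minimal sketch, assuming a hypothetical `/auth/login` path and a `{ token }` response shape (the real names come from `openapi.yaml`), with the HTTP client passed in so the sketch depends on neither Axios nor browser typings:

```typescript
// Hypothetical shapes: the real ones come from the types generated out of openapi.yaml
interface LoginRequest {
  username: string;
  password: string;
}

interface LoginResponse {
  token: string;
}

// Minimal fetch-like signature so the sketch does not depend on DOM typings
type HttpClient = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

function isLoginResponse(value: unknown): value is LoginResponse {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { token?: unknown }).token === "string"
  );
}

async function login(baseUrl: string, credentials: LoginRequest, http: HttpClient): Promise<LoginResponse> {
  // "/auth/login" is an assumed path, not taken from the project
  const response = await http(`${baseUrl}/auth/login`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(credentials),
  });
  if (!response.ok) {
    throw new Error(`Login failed with status ${response.status}`);
  }
  const data = await response.json();
  // Runtime check: static types cannot detect a backend that drifts from the contract
  if (!isLoginResponse(data)) {
    throw new Error("Response does not match the LoginResponse contract");
  }
  return data;
}
```

Passing the client in also makes the function easy to test with a fake, without a running backend.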

Following the current implementation and the instructions in `./.copilot`, change the backend application to use the DTOs generated in the shared-types library, both for the login route and for the add user command.

Apparently, it is quite tricky to use a shared library when the Docker build context of an app is its own folder, because files outside the build context are not available to the build. Thus, with these changes we moved the Docker configuration to the tools folder of our monorepo.

Update the frontend and the backend to use this new user DTO stored in `./libs/shared-types`.

The above prompt was sent after manually changing the User DTO in the openapi.yaml file:

User:

type: object

properties:

id:

type: string

format: uuid

username:

type: string

password:

type: string

format: password

role:

type: string

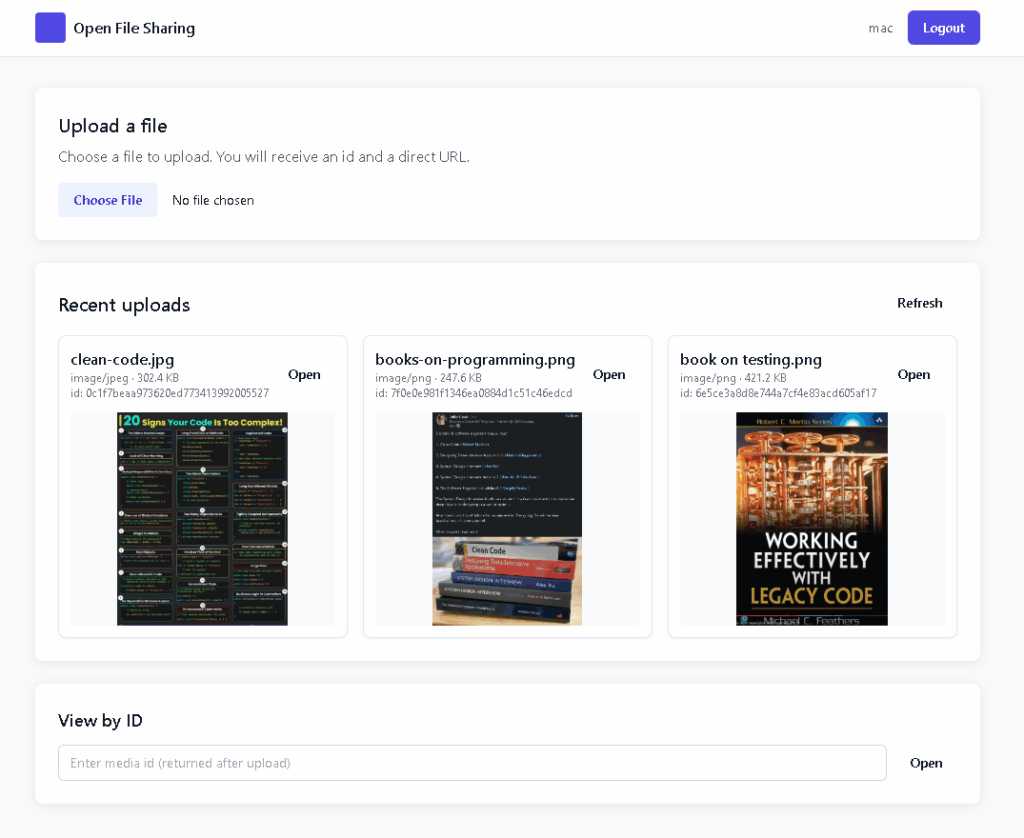

enum: [user, admin]Code language: CSS (css)After some more fixes and cleanups, the application looks pretty good and also provides the expected functionality:
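As an illustration of what the shared types give the frontend, here is a hand-written TypeScript equivalent of the User schema above plus a runtime guard. In the project these types are generated into `libs/shared-types/generated/ts`, so treat this as a sketch; since the schema has no `required` list, every property is optional.

```typescript
// Hand-written equivalent of the User schema from openapi.yaml (illustration only;
// the project generates its types into libs/shared-types/generated/ts)
type UserRole = "user" | "admin";

interface User {
  id?: string; // format: uuid
  username?: string;
  password?: string; // format: password
  role?: UserRole;
}

const UUID_PATTERN = /^[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}$/i;

// Runtime check for data coming over the network, where static types give no guarantee
function isUser(value: unknown): value is User {
  if (typeof value !== "object" || value === null) return false;
  const { id, username, password, role } = value as Record<string, unknown>;
  if (id !== undefined && (typeof id !== "string" || !UUID_PATTERN.test(id))) return false;
  if (username !== undefined && typeof username !== "string") return false;
  if (password !== undefined && typeof password !== "string") return false;
  if (role !== undefined && role !== "user" && role !== "admin") return false;
  return true;
}
```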


Upload files

In config.php and config.example.php, add a new prop under app called storage which will be the base for the uploads and also the users.csv file. To avoid duplicating code between commands and routes, please create a util which returns the storage path.

The generated util, and the way it was used in the app, were pretty good out of the box.
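The idea behind the storage util is a single place that builds paths under `app.storage`. A hypothetical TypeScript counterpart (the real util is PHP and its name is not shown here), assuming a config shaped like `{ app: { storage: string } }`; it also rejects `..` segments so generated paths stay inside the storage root:

```typescript
// Hypothetical TypeScript counterpart of the storage-path util (the real one is PHP)
interface AppConfig {
  app: { storage: string };
}

function storagePath(config: AppConfig, ...segments: string[]): string {
  const base = config.app.storage.replace(/\/+$/, ""); // drop trailing slashes
  const parts = segments
    .map((segment) => segment.replace(/^\/+|\/+$/g, "")) // drop leading/trailing slashes
    .filter((segment) => segment !== "");
  for (const part of parts) {
    // Refuse ".." so uploads and users.csv cannot escape the storage root
    if (part.split("/").indexOf("..") !== -1) {
      throw new Error(`Invalid path segment: ${part}`);
    }
  }
  return [base, ...parts].join("/");
}
```

Usage: with a storage of `/app/.data/`, `storagePath(config, "users.csv")` gives `/app/.data/users.csv`, and commands and routes share the same logic.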

Following the current implementation and the instructions in `./.copilot`, 1) create middleware to protect the upload endpoint in the backend. 2) Then create a new service with the endpoint to upload new media. 3) Also, implement the endpoint which retrieves all the uploaded media as a list in the new service.

Following the current implementation and the instructions in `./.copilot`, create the frontend components and consume the new endpoints: the upload endpoint and the list endpoint.

After some back and forth, the results of the above prompts were pretty impressive.
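The protecting middleware from the first prompt essentially reads the `Authorization` header, extracts the token and rejects the request with 401 when something is off. A hypothetical sketch of that check, assuming the JWT is sent as `Authorization: Bearer <token>`, with the actual token verification injected as `verifyToken` since the service's implementation is not shown here:

```typescript
// Hypothetical sketch of the check a protecting middleware performs before
// the upload handler runs; verifyToken returns the subject, or null if invalid/expired
type AuthResult =
  | { ok: true; subject: string }
  | { ok: false; status: 401; error: string };

function authenticate(
  authorizationHeader: string | undefined,
  verifyToken: (token: string) => string | null,
): AuthResult {
  if (authorizationHeader === undefined) {
    return { ok: false, status: 401, error: "Missing token" };
  }
  const match = /^Bearer\s+(\S+)$/i.exec(authorizationHeader.trim());
  if (match === null) {
    return { ok: false, status: 401, error: "Malformed Authorization header" };
  }
  const subject = verifyToken(match[1]);
  if (subject === null) {
    return { ok: false, status: 401, error: "Invalid or expired token" };
  }
  return { ok: true, subject };
}
```

The error cases line up with the "Missing tokens" and "Expired tokens" categories from project.md; in a PSR-15 middleware, returning the 401 response early means the upload handler is never reached.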

List and delete users

In order to manage the user list, we will create CLI helpers. Since this is not an important feature, we will use very weak prompts to see what the results will be.

Implement the list all users command in both service and tools/user-management.

Implement user deletion by selecting a user from the list. Make the selection as the selection from the `user-management/index.js`.

Surprisingly, the output generated was flawless.

Implement pagination for media

The pagination has been defined in the openapi.yaml, but hasn't yet been implemented:

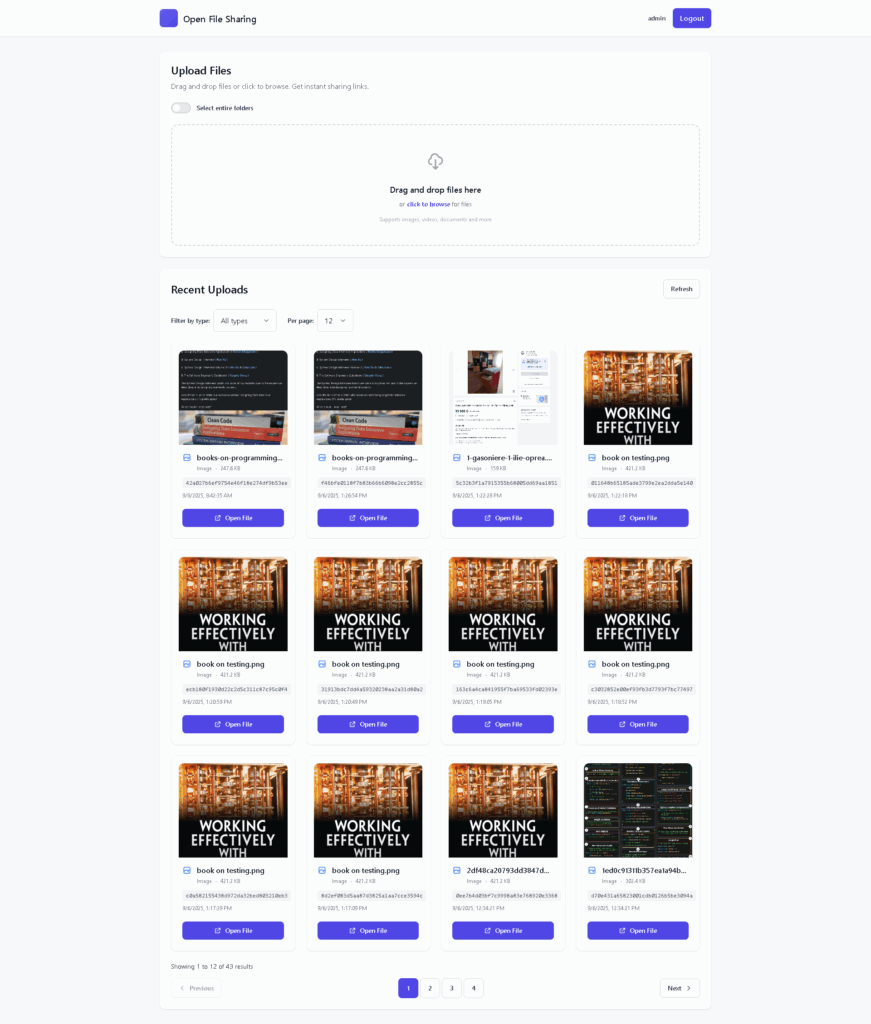

Following the current implementation and the instructions in ./.copilot, implement pagination, both in the backend and in the frontend.
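The core of the pagination logic is small enough to show in full. A hypothetical sketch of the slicing and metadata, assuming 1-based pages, a made-up default limit of 20, and field names (`page`, `limit`, `total`, `totalPages`) that may differ from the ones defined in `openapi.yaml`:

```typescript
// Hypothetical pagination helper; field names may differ from openapi.yaml
interface Page<T> {
  items: T[];
  page: number; // 1-based
  limit: number;
  total: number;
  totalPages: number;
}

function toPositiveInt(value: number, fallback: number): number {
  return Number.isFinite(value) && value >= 1 ? Math.floor(value) : fallback;
}

function paginate<T>(all: readonly T[], page: number, limit: number, defaultLimit = 20): Page<T> {
  const safeLimit = toPositiveInt(limit, defaultLimit);
  const totalPages = Math.max(1, Math.ceil(all.length / safeLimit));
  // Clamp the requested page into [1, totalPages]
  const safePage = Math.min(toPositiveInt(page, 1), totalPages);
  const start = (safePage - 1) * safeLimit;
  return {
    items: all.slice(start, start + safeLimit),
    page: safePage,
    limit: safeLimit,
    total: all.length,
    totalPages,
  };
}
```

Clamping out-of-range pages instead of returning an error is a design choice; answering 400 for invalid input is equally valid and closer to the validate-all-inputs rule in project.md.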

Improve the types

We don't currently use many of the types in the shared-types library. That is why we will start with the following prompt and iterate on it until the implementation is improved overall.

Following the current implementation and the instructions in ./.copilot, update the application to use the types from libs/shared-types in the media implementation, both in backend and frontend.

Create a backup zip tool

This app is actually intended to be a collector of photos, videos and other files, so we also want something to help us generate archives.

Taking into consideration the instructions in copilot and looking at the current state of the project, implement a command which creates a zip file of all the files or of certain types of files. Make the necessary changes to the backend Dockerfile.

Make sure to use the backend .data folder to store the zip files and include the date in the name of the generated zip.

Create the tools/create-zip cli counterpart for running the command at the root of the project.

After some back and forth and small manual changes, the tool to create backups was ready to use. Further improvements like a scheduler or intervals for the files can be added, but at this point they are not essential for our implementation.
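The two requirements of the prompt, a date in the archive name and an optional filter by file type, can be sketched as small pure functions. The names below (`backupFileName`, `selectFiles`, the `backup` prefix) are hypothetical:

```typescript
// Hypothetical helpers for the backup command: a dated archive name and a type filter
function backupFileName(date: Date, prefix = "backup"): string {
  const pad = (n: number): string => String(n).padStart(2, "0");
  // UTC keeps the name independent of the server's time zone
  const stamp = `${date.getUTCFullYear()}-${pad(date.getUTCMonth() + 1)}-${pad(date.getUTCDate())}`;
  return `${prefix}-${stamp}.zip`;
}

function selectFiles(files: readonly string[], extensions?: readonly string[]): string[] {
  if (extensions === undefined || extensions.length === 0) {
    return files.slice(); // no filter: archive everything
  }
  const wanted = extensions.map((ext) => ext.replace(/^\./, "").toLowerCase());
  return files.filter((file) => {
    const name = file.slice(file.lastIndexOf("/") + 1); // ignore dots in folder names
    const dot = name.lastIndexOf(".");
    return dot > 0 && wanted.indexOf(name.slice(dot + 1).toLowerCase()) !== -1;
  });
}
```

Since the name only contains the date, running the command twice on the same day produces the same file name; adding the time is a simple way to avoid overwriting an earlier archive.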

Improve uploads

Next, we improve the upload interface by adding react-dropzone to the UI packages, and add another endpoint for multiple file uploads:

Add `react-dropzone` to the project for friendlier uploads interface. Make any needed adjustments to the backend.

Implement multiple file uploads (directories or simply 2 or more files which are selected or dragged and dropped). Make drag and drop in react-dropzone work with multiple files as well. Remove the single file upload endpoint from both the frontend and the backend.
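Before sending several files in one request, the frontend can split them into accepted and rejected ones, so the user gets feedback per file. A hypothetical sketch with made-up limits and allowed types; browser `File` objects have `name`, `size` and `type`, so they fit the `CandidateFile` shape. The backend still has to validate everything, as project.md requires.

```typescript
// Hypothetical client-side pre-check before sending several files in one request
interface CandidateFile {
  name: string;
  size: number; // bytes
  type: string; // MIME type, e.g. "image/jpeg"
}

interface Rejection {
  name: string;
  reason: "too-large" | "unsupported-type";
}

function partitionUploads(
  files: readonly CandidateFile[],
  maxBytes: number,
  allowedTypePrefixes: readonly string[] = ["image/", "video/"], // photos and videos only
): { accepted: CandidateFile[]; rejected: Rejection[] } {
  const accepted: CandidateFile[] = [];
  const rejected: Rejection[] = [];
  for (const file of files) {
    if (!allowedTypePrefixes.some((prefix) => file.type.startsWith(prefix))) {
      rejected.push({ name: file.name, reason: "unsupported-type" });
    } else if (file.size > maxBytes) {
      rejected.push({ name: file.name, reason: "too-large" });
    } else {
      accepted.push(file);
    }
  }
  return { accepted, rejected };
}
```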

An interesting fact learnt from the agent was that we can run npm commands within a specific folder like this:

npm --prefix "C:\Users\Josh\Github\open-file-sharing\apps\ui" install

Improve the UI

Make the web ui mobile first, responsive and modern.

With such a simple prompt, the AI revamped the look into something quite clean, modern and functional. For this situation and these amazing results, it seems fair to mention the name of the AI: Claude Sonnet 4.

With some manual changes to fix the logo and a toggle button added for the upload, the app looks completely different now.

Delete files

Implement delete functionality only for the users in the admin group.

Again, I was amazed by how well the implementation was done. It fit perfectly into the current form of the project, which I did not expect.
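Admin-only deletion comes down to one permission check that both the UI (to hide the delete button) and the backend (to answer 403) rely on. A hypothetical sketch, assuming the `user`/`admin` roles from the User schema and that regular users may list and upload:

```typescript
// Hypothetical permission table for the media actions
type Role = "user" | "admin";
type Action = "media:list" | "media:upload" | "media:delete";

// Every signed-in user can list and upload; only admins can delete (assumption)
const PERMISSIONS: Record<Action, readonly Role[]> = {
  "media:list": ["user", "admin"],
  "media:upload": ["user", "admin"],
  "media:delete": ["admin"],
};

function isAllowed(role: Role | undefined, action: Action): boolean {
  return role !== undefined && PERMISSIONS[action].includes(role);
}

// 401 when nobody is signed in, 403 when signed in without the right role
function statusFor(role: Role | undefined, action: Action): 200 | 401 | 403 {
  if (role === undefined) return 401;
  return isAllowed(role, action) ? 200 : 403;
}
```

Keeping the rules in one table avoids the frontend and backend drifting apart on who may delete.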

Implement toasts

Implement `react-toastify` for the messages from the backend.

The installation went fine, but some adjustments were needed. One was that the toast setup was not imported in the best place: instead of App.tsx, we put it in main.tsx.

Another was that the response from the backend was not used as the message in the frontend. Sure, we should pay attention to the level of detail we show in the frontend, but in our small application it makes sense to simply show the returned message.
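Showing the backend message in the toast, with a safe fallback when the payload has none, can be isolated in a small helper. A sketch, assuming error payloads of the form `{ message: string }`, which the article does not confirm; the helper name is hypothetical:

```typescript
// Hypothetical helper that turns a backend payload into toast text,
// assuming responses shaped like { message: string }
function toastMessage(payload: unknown, fallback = "Something went wrong"): string {
  if (typeof payload === "object" && payload !== null && "message" in payload) {
    const message = (payload as { message: unknown }).message;
    if (typeof message === "string" && message.trim() !== "") {
      return message;
    }
  }
  // No usable message: show a generic text rather than raw error details
  return fallback;
}
```

With Axios and react-toastify, it would be called along the lines of `toast.error(toastMessage(error.response?.data))`.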

Refactor the backend service

Implement controllers in the service application, so that the routes be more organized and easier to read for humans.

This change clearly made the app's routes more readable. Now we can easily get an overview of what the application provides.

Create a common base for all the commands in the backend.

Making this change reduced the code needed in each of the commands and helped with creating a more standardized approach.
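A common base for commands is typically the template method pattern: the base class handles shared concerns (argument checks, error handling, exit codes) and each command only implements its own logic. The service does this in PHP; the TypeScript below is a hypothetical illustration of the same idea, with a made-up `user:delete` command backed by an in-memory set:

```typescript
// Hypothetical template-method base: shared checks live here, commands implement run()
abstract class BaseCommand {
  abstract readonly name: string;
  protected abstract readonly requiredArgs: readonly string[];

  protected abstract run(args: Record<string, string>): string;

  execute(args: Record<string, string>): { exitCode: number; output: string } {
    const missing = this.requiredArgs.filter((arg) => !(arg in args));
    if (missing.length > 0) {
      return { exitCode: 1, output: `${this.name}: missing ${missing.join(", ")}` };
    }
    try {
      return { exitCode: 0, output: this.run(args) };
    } catch (error) {
      // Every command reports failures the same way
      const message = error instanceof Error ? error.message : String(error);
      return { exitCode: 1, output: `${this.name}: ${message}` };
    }
  }
}

class DeleteUserCommand extends BaseCommand {
  readonly name = "user:delete"; // hypothetical command name
  protected readonly requiredArgs = ["username"];

  constructor(private readonly users: Set<string>) {
    super();
  }

  protected run(args: Record<string, string>): string {
    if (!this.users.delete(args.username)) {
      throw new Error(`user ${args.username} not found`);
    }
    return `Deleted ${args.username}`;
  }
}
```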

Improve accessibility

I admit I got lazy with the two prompts above. 🙂 Continuing in the same spirit, I decided to compare the output of a "lazy prompt" with that of a prompt improved by AI.

Implement WCAG standards, make sure that the app is accessibility compliant.

The output of this prompt was pretty good, with obvious accessibility improvements. The version of the prompt enriched by ChatGPT was:

Ensure the application fully complies with WCAG 2.2 AA accessibility standards. Implement semantic HTML, proper ARIA attributes where necessary, sufficient color contrast, keyboard navigability, focus management, and support for screen readers. Test with automated tools (e.g., axe, Lighthouse) and manual checks to validate accessibility compliance.

The output was better, the main change being that it added a way to test the accessibility compliance. With the new solution, we can now run new commands like:

npm --prefix "C:\Users\Lenovo\Code\open-file-sharing\apps\ui" run test:accessibility

Small tweaks were needed this time, but all in all it was worth using the new prompt.
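Color contrast is one of the checks that tools like axe and Lighthouse run. The WCAG 2.x formula is simple enough to sketch: linearize each sRGB channel, compute the relative luminance and compare the two colors as (L1 + 0.05) / (L2 + 0.05), with L1 the lighter one. A TypeScript sketch, where the function names are hypothetical:

```typescript
// WCAG 2.x relative luminance of a 6-digit hex color such as "#1f2937"
function relativeLuminance(hex: string): number {
  const match = /^#?([0-9a-f]{6})$/i.exec(hex);
  if (match === null) throw new Error(`Expected a 6-digit hex color, got ${hex}`);
  const value = parseInt(match[1], 16);
  const channels = [(value >> 16) & 0xff, (value >> 8) & 0xff, value & 0xff].map((c) => {
    const s = c / 255;
    // Linearize the sRGB channel as defined by WCAG 2.x
    return s <= 0.03928 ? s / 12.92 : Math.pow((s + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * channels[0] + 0.7152 * channels[1] + 0.0722 * channels[2];
}

function contrastRatio(foreground: string, background: string): number {
  const a = relativeLuminance(foreground);
  const b = relativeLuminance(background);
  const [lighter, darker] = a >= b ? [a, b] : [b, a];
  return (lighter + 0.05) / (darker + 0.05);
}

// AA requires at least 4.5:1 for normal text and 3:1 for large text
function meetsAA(foreground: string, background: string, largeText = false): boolean {
  return contrastRatio(foreground, background) >= (largeText ? 3 : 4.5);
}
```

For example, gray `#767676` on white gives about 4.54:1 and passes AA for normal text, while `#777777` gives about 4.48:1 and only passes for large text.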

Conclusions

Developing this project this way took around 3 days. I am not sure what running the AI agents cost, and I will pay attention to that in the future, but the cost in developer time was much, much lower.

Everything was very fast and the results are pretty close to what was needed. But we should be aware that throughout the development, the AI agents' answers were sometimes a bit off topic, and correcting them manually or re-running them for better results was an essential contribution of the human developer. Stepping in this way also saved time and running costs.

Had I started this project manually, everything would generally have been slower, but pretty much on point. The biggest downside would have been writing the documentation manually, whereas generating it was accurate and a breeze.

As far as tech choices go, React worked well, probably because it is a popular library. SlimPHP, on the other hand, is not as widely adopted, which may have been a pain point for the AI and may explain the lower-quality responses.

Looking back, I will definitely continue using AI, as it's an incredible tool. However, I will probably still do the initial setup of a project manually, then ask it for smaller features or keep using its amazing autocomplete. Even if the start was a bit slow, once the basic application was in place, the prompts started generating pretty good quality code.

In hindsight, it would have been good to write down which AI performed better at which point of the project. I noticed that some of them performed better, but I did not keep track of the project stage at which that happened. Thus, the conclusions are about AI usage in coding taken as a "soup".

In this context, even though I kept paying attention to what was generated and reviewed the output, my general feeling over the project was that I was a bit disconnected from what was being delivered: I understood what was going on, but did not get my hands dirty doing it. It was a nice feeling, but slightly worrying, as this usually comes at a cost to one's skills. But the world of programming is changing and maybe it's not such a bad thing for developers to change with it, too! 🙂