In an era of everything-as-a-service, which coincides with an active hunt for personal information, self-hosting some of the services you need seems like quite a good idea.

I am currently hosting some services on a Raspberry Pi. I covered this a while back, but the time has come to upgrade my local services. In short, and as a preview of what is to come: another Pi, with the old one being transformed into a lightweight kubernetes host for whatever personal project I work on. Until then, let's take the local services to the next level.

Read more:

- https://draghici.net/2023/08/07/setting-up-my-new-raspberry-pi/

- https://draghici.net/2025/06/27/raspberry-pi-in-an-argon-neo-5/

Customize the operating system

With all the Linux systems that I set up, I usually go through the same steps and make them look pretty similar:

- first is the install of `zsh` with `antigen` for a nicer interaction with the shell;

- then the locales setup follows;

- then we set up the sudoer, together with the firewall and fail2ban;

- sometimes I install webmin as well, for managing the server through a web interface.

All of these steps are documented in other articles, so I won't repeat them here. One main difference is that we will not set up the swap partition here.

Read more:

- https://draghici.net/2024/11/29/host-old-apps-in-containers-with-debian-12/

- https://draghici.net/2023/11/15/a-small-hosting-server-with-virtualmin-and-debian-12/

Updates

One important thing we will do is set up automatic updates for the stable versions. This deserves a specific mention because we chose not to install the web management interface:

sudo apt install unattended-upgrades

sudo dpkg-reconfigure -plow unattended-upgrades

sudo systemctl enable unattended-upgrades

sudo systemctl start unattended-upgrades
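To confirm that the reconfigure step really switched the periodic runs on, a minimal sketch like the one below can help. It assumes the settings live in /etc/apt/apt.conf.d/20auto-upgrades, which is where the reconfigure step normally writes them; the function name auto_upgrades_enabled is made up for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed when the given APT config file enables both
# the package list refresh and the unattended upgrade run.
# The default path is an assumption about where dpkg-reconfigure writes them.
auto_upgrades_enabled() {
  local file="${1:-/etc/apt/apt.conf.d/20auto-upgrades}"
  [ -r "$file" ] || return 1
  grep -Eq '^[[:space:]]*APT::Periodic::Update-Package-Lists[[:space:]]+"[1-9][0-9]*";' "$file" &&
    grep -Eq '^[[:space:]]*APT::Periodic::Unattended-Upgrade[[:space:]]+"[1-9][0-9]*";' "$file"
}

if auto_upgrades_enabled; then
  echo "automatic upgrades: enabled"
else
  echo "automatic upgrades: not enabled (or config file not found)"
fi
```

A value of "0" for either setting counts as disabled, which is why the pattern only accepts positive numbers.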


SSH Keys

Since we are using Raspbian, set up via the provided GUI, ssh access is already configured. However, we might need to access other services from our Pi, where some ssh keys will come in handy:

sudo apt install openssh-client -y

ssh-keygen

mkdir -p ~/.ssh

chmod 700 ~/.ssh

chmod 400 ~/.ssh/id_rsa

chmod 600 ~/.ssh/authorized_keys

If the defaults are used with the generate command, you can use the following to view your public key:

cat ~/.ssh/id_rsa.pub
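If you want to double check the permissions later on, the sketch below compares the modes set above against what is actually on disk. The helper names check_mode and audit_ssh_dir are hypothetical, and it assumes GNU stat (the one shipped with Debian based systems) and the default id_rsa key name.

```bash
#!/usr/bin/env bash
# Hypothetical helper: report a path whose mode differs from the expected one.
# Uses GNU stat (-c '%a' prints the octal mode).
check_mode() {
  local path="$1" expected="$2" actual
  [ -e "$path" ] || return 0   # nothing to check if the file is absent
  actual=$(stat -c '%a' "$path")
  if [ "$actual" != "$expected" ]; then
    echo "$path: mode $actual, expected $expected"
    return 1
  fi
}

# Hypothetical helper: check the directory and the files touched above.
audit_ssh_dir() {
  local dir="${1:-$HOME/.ssh}" status=0
  check_mode "$dir" 700 || status=1
  check_mode "$dir/id_rsa" 400 || status=1
  check_mode "$dir/authorized_keys" 600 || status=1
  return "$status"
}

if audit_ssh_dir; then
  echo "ssh permissions look fine"
fi
```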

Read more:

- https://draghici.net/2024/11/29/host-old-apps-in-containers-with-debian-12/

- https://www.hostinger.com/tutorials/how-to-set-up-ssh-keys

Storage

The new Pi has internal storage on an M.2 drive, but we still want it to automatically recognize and mount USB devices, as we will probably need extended storage.

sudo apt install udevil smartmontools autofs -y

lsblk -o NAME,FSTYPE,TYPE,SIZE,MOUNTPOINT,UUID

The output should look similar to:

NAME        FSTYPE TYPE   SIZE MOUNTPOINT     UUID

sda                disk 115.5G

└─sda1      vfat   part 115.4G                A1BE-41D0

sdb                disk 115.5G

└─sdb1      vfat   part 115.4G                2BC3-4A1E

nvme0n1            disk   1.9T

├─nvme0n1p1 vfat   part   512M /boot/firmware 7516-A9B7

└─nvme0n1p2 ext4   part   1.9T /              dff-8fcb6dc1709d

- `sudo nano /etc/auto.master` - add the following line:

/home/pi/media /etc/auto.usb --timeout=60 --ghost

- `sudo nano /etc/auto.usb` - add the following for each of the drives:

<mount-directory-name> -fstype=auto,uid=pi,gid=pi,rw UUID="<uuid-of-the-drive>"

- `sudo systemctl restart autofs.service`

The drives will be available in the folders you specify as you try to access them.
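Since /etc/auto.usb needs one line per drive, a small generator can save some typing. The sketch below only prints the lines in the format shown above; the function name auto_usb_entries and the folder names usb1 and usb2 are placeholders made up for this example, while the UUIDs are the ones from the lsblk output.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read "<mount-directory-name> <uuid>" pairs from stdin
# and print one /etc/auto.usb map line per drive, in the format shown above.
auto_usb_entries() {
  local name uuid
  while read -r name uuid; do
    # skip blank lines and comments
    case "$name" in ''|'#'*) continue ;; esac
    printf '%s -fstype=auto,uid=pi,gid=pi,rw UUID="%s"\n' "$name" "$uuid"
  done
}

# Example with the two USB sticks from the lsblk output above.
auto_usb_entries <<'EOF'
usb1 A1BE-41D0
usb2 2BC3-4A1E
EOF
```

Review the printed lines before pasting them into the map file, then restart autofs as described above.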

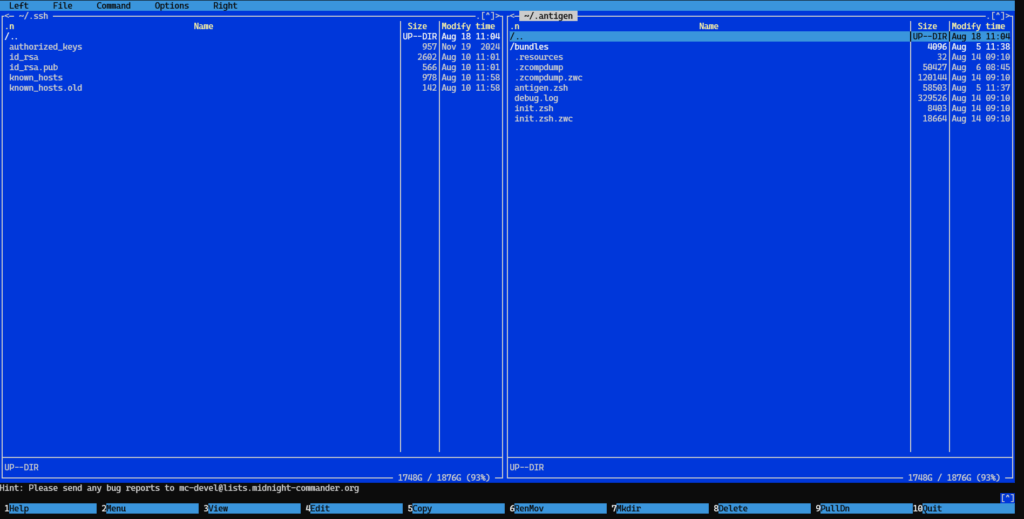

Midnight Commander

A blast from the past for dealing with files is Midnight Commander. It's a fairly accurate copy of Norton Commander, the first file manager I ever used. 🙂

It's easy to install, has a good, well-organized interface, and helps a lot when you feel too lazy to cd in and out of folders.

sudo apt install mc -y

mc

Read more:

- https://midnight-commander.org/

- https://en.wikipedia.org/wiki/Norton_Commander#/media/File:Norton_Commander_5.51.png

Podman

For this iteration, we will move towards Podman and away from Docker. Both are similar, mature projects, with docker obviously being the heavyweight. However, in light of docker's licensing changes, it's nice to be aware of other options as well.

Podman is almost the same thing when you interact with it, with the main advantage that podman can natively generate yaml files for kubernetes. In the same spirit, podman also supports pods, a notion similar to the pods in kubernetes.

Under the hood, though, we find more important changes: podman has a daemonless architecture, which can make it more resource efficient. Roughly speaking, podman starts each container directly from the process that invoked it, while docker routes every request through its long-running daemon, which then owns the containers.

After deciding to use podman, installation was a breeze:

sudo apt-get -y install podman podman-compose

Since podman mirrors the docker cli and many commands found online are written for docker, you could also create a symlink on your server to copy-paste more easily 😸

sudo ln -s /usr/bin/podman /usr/local/bin/docker

Another way to go is to add a shell function to your .zshrc file (since we are using zsh). This has the advantage that you can also run compose with a space between the words, instead of the hyphen:

# `podman-compose` shortcut for `podman compose`

podman() {

if [[ "$1" == "compose" ]]; then

shift

podman-compose "$@"

else

command podman "$@"

fi

}

docker() {

if [[ "$1" == "compose" ]]; then

shift

podman-compose "$@"

else

command podman "$@"

fi

}

To run container images from Docker Hub, we also need to set up the docker registry:

sudo nano /etc/containers/registries.conf

Then add the following to the file and save:

unqualified-search-registries = ["docker.io"]

You should now be able to run:

podman run -d --name my-nginx -p 8080:80 --restart=no nginx

wget -qO- http://localhost:8080

podman ps
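When these commands run from a script, the wget call can fire before nginx is ready to answer. A minimal sketch of a retry wrapper is shown below; the function name retry, the number of attempts and the delay are all assumptions picked for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical helper: run a command up to N times, pausing between attempts.
# usage: retry <attempts> <delay-seconds> <command> [args...]
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    "$@" && return 0
    # no need to sleep after the last failed attempt
    [ "$i" -lt "$attempts" ] && sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Usage (not executed here): wait up to roughly 10 seconds for nginx to answer.
# retry 10 1 wget -qO- http://localhost:8080
```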


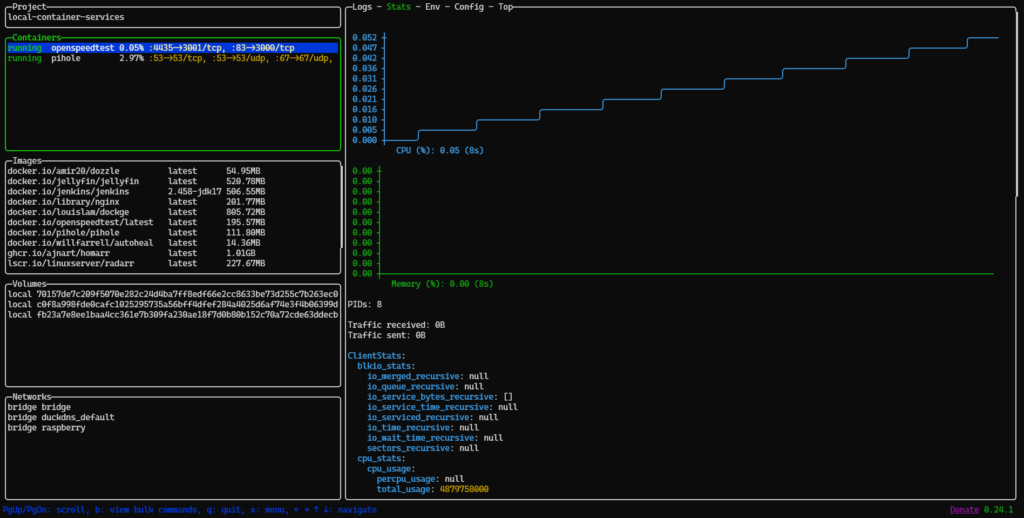

lazydocker

I don't often need to see the containers in an interface from the command line, but for when I do, I got pretty used to lazydocker. To use it with podman, we need to make some tweaks:

systemctl --user enable --now podman.socket

export DOCKER_HOST=unix:///run/user/$UID/podman/podman.sock

lazydocker

To make the DOCKER_HOST change permanent, we can add the export to the ~/.zshrc file, or to the config file of whichever shell you are using.
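A minimal sketch for adding the export without duplicating it on repeated runs could look like this. The helper name append_once is hypothetical; the export line is the one used above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if it is not already there,
# so re-running the setup does not duplicate the export.
append_once() {
  local line="$1" file="$2"
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Single quotes keep $UID literal in the file, so the shell expands it at startup.
# Usage (not executed here):
# append_once 'export DOCKER_HOST=unix:///run/user/$UID/podman/podman.sock' ~/.zshrc
```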

Read more:

- https://podman-desktop.io/docs/migrating-from-docker/using-the-docker_host-environment-variable

- https://stelfox.net/blog/2023/05/podman-socket-compatibility/

Containers

As far as the containers go, they will fall under a couple of possible roles: 1) support containers which are needed to run the services we want to use, and 2) the services themselves. However, in the file structure we will not differentiate between these roles.

Before we start any containers, we need to set up the network. Please note that podman does not support the docker network list command, only ls:

podman network ls --format '{{.Name}}' | grep -qx raspberry || podman network create raspberry
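An alternative sketch that avoids parsing the listing altogether is shown below. It assumes a podman version that ships the network exists subcommand, and the function name ensure_network is made up for this example.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create a podman network only when it is missing.
# Relies on `podman network exists`, which returns 0 when the network is present.
ensure_network() {
  local name="$1"
  if podman network exists "$name"; then
    echo "network '$name' already exists"
  else
    podman network create "$name" >/dev/null && echo "network '$name' created"
  fi
}

# Usage (not executed here):
# ensure_network raspberry
```

The function is safe to call from any setup script, since a second run only reports that the network is already there.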

We will keep the containers grouped inside the services folder, while the configuration for each of them will be kept close to the container definition.

.data/

services/

|-- pihole

| |-- .gitignore

| |-- .env

| |-- .env.example

| |-- compose.yml

| |-- README.md

|-- jenkins

| |-- .data/

| |-- compose.yml

| |-- ...

| ...

.autoload

.env

.env.example

.gitignore

autoload-services.sh

manage-services.sh

README.md

...

When defining the services, we will keep the following in mind:

- For simplicity, each service which needs env variables will manage this inside a file or inside its compose file;

- We will store data, logs, whatever needs to be persistent inside `~/.data`, and will prefix the data folders used with the name of the service. As an exception, we allow storing data inside the container folder, if that is relevant for that particular case. We store the data needed for the application to run (e.g. config files) in the application folder;

- The default restart policy is `unless-stopped`, but we can do `always` for essential services like `dockge`. Since podman does not run as a daemon, this is ignored, and we use scripts to mimic the behavior of `restart: always` and to stop a container from being restarted;

- The `network` for the services will be `raspberry`;

- Usually, the `container_name` will be the same as the name of the service;

- We will keep a central `README.md` file which will contain information about how to set up the project, and other such files in the services where specific information will be stored.
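The conventions above can be sketched as a small scaffolding helper. Everything here is an assumption for illustration: the function name new_service, the CHANGE_ME image placeholder and the contents of the generated .gitignore are not part of the original setup.

```bash
#!/usr/bin/env bash
# Hypothetical helper: scaffold <services-dir>/<name> following the conventions
# above. The image is left as a placeholder on purpose.
new_service() {
  local name="$1" root="${2:-services}"
  local dir="$root/$name"
  [ -n "$name" ] || { echo "usage: new_service <name> [services-dir]" >&2; return 2; }
  if [ -e "$dir" ]; then
    echo "$dir already exists" >&2
    return 1
  fi

  mkdir -p "$dir"
  printf '.env\n.data/\n' > "$dir/.gitignore"    # assumed ignore rules
  : > "$dir/.env.example"
  printf '# %s\n' "$name" > "$dir/README.md"
  cat > "$dir/compose.yml" <<EOF
services:
  $name:
    container_name: $name
    image: CHANGE_ME
    restart: unless-stopped
    networks:
      - raspberry

networks:
  raspberry:
    external: true
EOF
}

# Usage (not executed here):
# new_service openspeedtest
```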

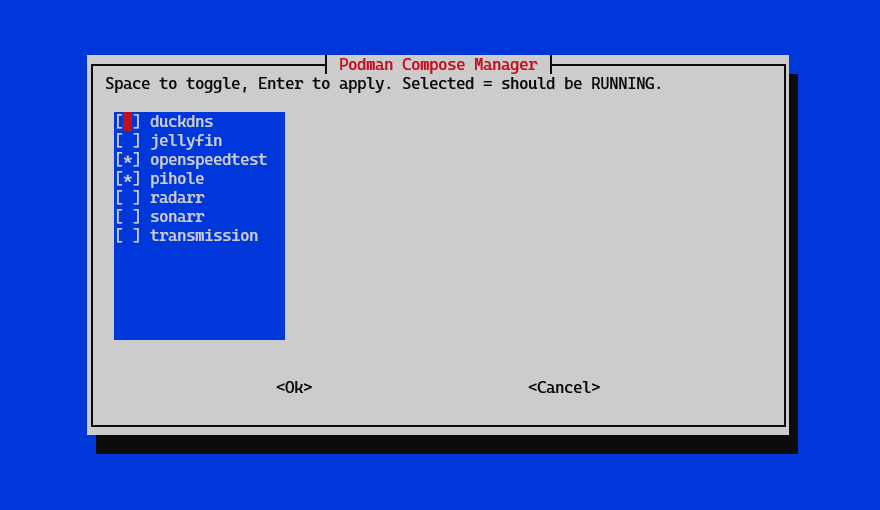

Start and stop

We used to run dockge to start, stop and manage containers. Since podman is designed to run without a daemon, we need a different solution. The chosen option is a bash script which shows a checklist menu and starts or stops each service based on the selection.

The interface above is created by:

#!/usr/bin/env bash

# manage-services.sh - checklist for starting/stopping podman compose projects

#

# For each subfolder under SERVICES_DIR that contains a compose file,

# the script will let you toggle ON/OFF (spacebar) and apply (Enter).

set -o pipefail

# Define colors

RED='\e[31m'

BLUE='\e[34m'

NC='\e[0m' # No Color

# Helper function for colored echo

colored_echo() {

local color="$1"

local message="$2"

echo -e "${color}${message}${NC}"

}

# Load the .env file

if [ -f .env ]; then

set -a

source .env

set +a

colored_echo "$BLUE" "Loaded environment variables from .env"

fi

SERVICES_DIR="${CONTAINER_SERVICES_DIR:-services}" # default "services"

ORCHESTRATOR="${CONTAINER_ORCHESTRATOR:-podman}" # default "podman" or "docker"

WHIPTAIL_HEIGHT=20

WHIPTAIL_WIDTH=78

WHIPTAIL_MENU_LINES=12

# check dependencies

if ! command -v "$ORCHESTRATOR" >/dev/null 2>&1; then

colored_echo "$RED" "Error: $ORCHESTRATOR not found in PATH."

exit 2

fi

if ! command -v whiptail >/dev/null 2>&1; then

colored_echo "$RED" "Error: whiptail not found. Install 'whiptail' (Debian/Ubuntu) or 'newt' (Fedora/RHEL)."

exit 2

fi

# helper: get the compose file

get_compose_file() {

local dir="$1"

find "$dir" -maxdepth 1 -type f \( -iname 'docker-compose.yml' -o -iname 'docker-compose.yaml' -o -iname 'compose.yml' -o -iname 'compose.yaml' -o -iname 'podman-compose.yml' -o -iname 'podman-compose.yaml' \) -print -quit

}

# gather service folders that contain a compose file

service_dirs=()

no_compose=()

only_docker=()

while IFS= read -r -d '' d; do

[ -d "$d" ] || continue

compose_file=$(get_compose_file "$d")

# if compose does not exist

if [ -z "$compose_file" ]; then

no_compose+=("$d")

continue

fi

# if podman and /var/run/docker.sock is needed

if [ "$ORCHESTRATOR" = "podman" ] && grep -q "/var/run/docker.sock" "$compose_file"; then

only_docker+=("$d")

continue;

fi

service_dirs+=("$d")

done < <(printf "%s\0" "$SERVICES_DIR"/*/ 2>/dev/null)

if [ ${#service_dirs[@]} -eq 0 ]; then

colored_echo "$RED" "No service directories with compose files found in '$SERVICES_DIR'."

if [ ${#no_compose[@]} -gt 0 ]; then

colored_echo "$RED" "Found directories without compose files:"

for d in "${no_compose[@]}"; do

colored_echo "$RED" " - $(basename "$d")"

done

fi

exit 0

fi

# build whiptail choices

choices=()

for d in "${service_dirs[@]}"; do

name=$(basename "$d")

compose_file=$(get_compose_file "$d")

running_count=$(podman ps --filter "label=com.docker.compose.project=$name" --format "{{.Names}}" 2>/dev/null | wc -l)

if [ "$running_count" -gt 0 ]; then

choices+=("$name" "" ON)

else

choices+=("$name" "" OFF)

fi

done

# show checklist

selected=$(whiptail --title "Podman Compose Manager" \

--checklist "Space to toggle, Enter to apply. Selected = should be RUNNING." \

${WHIPTAIL_HEIGHT} ${WHIPTAIL_WIDTH} ${WHIPTAIL_MENU_LINES} \

"${choices[@]}" \

3>&1 1>&2 2>&3)

# user cancelled?

if [ $? -ne 0 ]; then

colored_echo "$BLUE" "Cancelled."

exit 0

fi

# parse selected string into an array

eval "selected_arr=($selected)"

# helper: check if an element is in selected_arr

in_selected() {

local needle="$1"

for e in "${selected_arr[@]:-}"; do

if [ "$e" = "$needle" ]; then

return 0

fi

done

return 1

}

# helper: stop a service

stop_service() {

local name="$1"

output=$(podman-compose down 2>&1)

if ! echo "$output" | grep -q -i "no such container"; then

echo "$output"

echo ""

fi

}

# apply selection

colored_echo "$BLUE" "Applying selection..."

echo ""

for d in "${service_dirs[@]}"; do

name=$(basename "$d")

pushd "$d" >/dev/null || { colored_echo "$RED" "Failed to cd into $d"; continue; }

if in_selected "$name"; then

colored_echo "$BLUE" "Processing $name..."

echo ""

podman-compose up -d

echo ""

else

stop_service "$name"

fi

popd >/dev/null

done

# write the selected services to a file

echo "${selected_arr[@]}" > ".autoload"

# show any directories that were ignored

if [ ${#no_compose[@]} -gt 0 ]; then

colored_echo "$BLUE" "Ignored directories (no compose file found):"

for d in "${no_compose[@]}"; do

colored_echo "$BLUE" " - $(basename "$d")"

done

echo ""

fi

# show any directories which were skipped

# only_docker is filled while scanning the service folders above

if [ ${#only_docker[@]} -gt 0 ]; then

colored_echo "$BLUE" "Skipped directories (only usable with docker):"

for d in "${only_docker[@]}"; do

colored_echo "$BLUE" " - $(basename "$d")"

done

echo ""

fi

colored_echo "$BLUE" "Done."

Autostart

With podman, restarting containers automatically is a bit trickier than with docker, again because it does not rely on a daemon. To restart a container the way the restart: always policy does, you need to generate and enable a systemd unit for it:

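podman generate systemd --new --files --name myservice

mkdir -p ~/.config/systemd/user && mv container-myservice.service ~/.config/systemd/user/

systemctl --user daemon-reload

systemctl --user enable container-myservice.service

systemctl --user start container-myservice.service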

However, if you choose to run the containers with the start and stop script above, then you only need to set up one script to run at each system reboot:

#!/usr/bin/env bash

# autoload-services.sh - script to automatically load services for podman

#

# This script loads the services defined in the .autoload file and starts them.

# Load the .env file first, so its values are visible to the defaults below

if [ -f .env ]; then

set -a

source .env

set +a

fi

SERVICES_DIR="${CONTAINER_SERVICES_DIR:-services}"

ORCHESTRATOR="${CONTAINER_ORCHESTRATOR:-podman}"

# Stop if not podman

if [ "$ORCHESTRATOR" != "podman" ]; then

echo "Error: This script is only compatible with Podman."

exit 1

fi

# Load the content of .autoload

if [ -f .autoload ]; then

read -ra services <<< "$(cat .autoload)"

for service in "${services[@]}"; do

pushd "$SERVICES_DIR/$service" >/dev/null || { echo "Failed to cd into $service"; continue; }

podman-compose up -d

popd >/dev/null

done

fi

Then you need to run:

sudo bash -c 'cat > /etc/systemd/system/local-container-services.service <<EOF

[Unit]

Description=Autoload Local Container Services

After=network.target

[Service]

Type=oneshot

ExecStart=/bin/bash -c "cd /home/pi/local-container-services && ./autoload-services.sh"

RemainAfterExit=yes

User=pi

WorkingDirectory=/home/pi/local-container-services

[Install]

WantedBy=multi-user.target

EOF'

And finally run systemctl for it:

sudo systemctl daemon-reload

sudo systemctl enable local-container-services.service

sudo systemctl start local-container-services.service

journalctl -u local-container-services.service -f
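The hand-off between the two scripts is the .autoload file: manage-services.sh writes the selected names on a single space-separated line, and autoload-services.sh splits that line back into an array. A minimal sketch of that round trip, using a temporary file and the hypothetical helper names write_autoload and read_autoload:

```bash
#!/usr/bin/env bash
# Hypothetical helpers mirroring how the two scripts share state.
write_autoload() {   # usage: write_autoload <file> <service>...
  local file="$1"
  shift
  echo "$@" > "$file"
}

read_autoload() {    # prints one service name per line
  local file="$1"
  local services=()
  [ -f "$file" ] || return 0
  read -ra services < "$file"
  # print nothing (instead of an empty line) when no service is stored
  if [ "${#services[@]}" -gt 0 ]; then
    printf '%s\n' "${services[@]}"
  fi
}

state=$(mktemp)
write_autoload "$state" pihole openspeedtest
read_autoload "$state"
rm -f "$state"
```

Running it prints pihole and openspeedtest on separate lines. Because the format is split on whitespace, service folder names must not contain spaces.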

OpenSpeedTest

The following setup is for the first container we will run in the current implementation. The container does not need persistent data, and we don't really need to modify the environment variables, which is why we specify them directly in the compose file. Another thing to mention is that we use restart: unless-stopped for docker support, but in our podman implementation it will not matter.

The compose.yml file should contain:

services:

  speedtest:

    container_name: openspeedtest

    image: openspeedtest/latest

    environment:

      - ENABLE_LETSENCRYPT=False

      - DOMAIN_NAME=raspy

      - USER_EMAIL=my@email.com

      - VERIFY_OWNERSHIP="TXT-FILE-CONTENT"

    restart: unless-stopped

    ports:

      - 88:3000

      - 89:3001

    networks:

      - raspberry

    labels:

      - project=local-services

networks:

  raspberry:

    external: true

Read more: