I recently worked on a project where I used prompts to generate most of the code. I had to make some fixes, as expected, but the question arose: might more of those have been avoided if the quality of the prompts had been higher?

AI is obviously changing the was we use programming languages and we probably will never go back to coding without it. It's often doing an amazing job, but it's not yet close to removing the human developers from the loop.

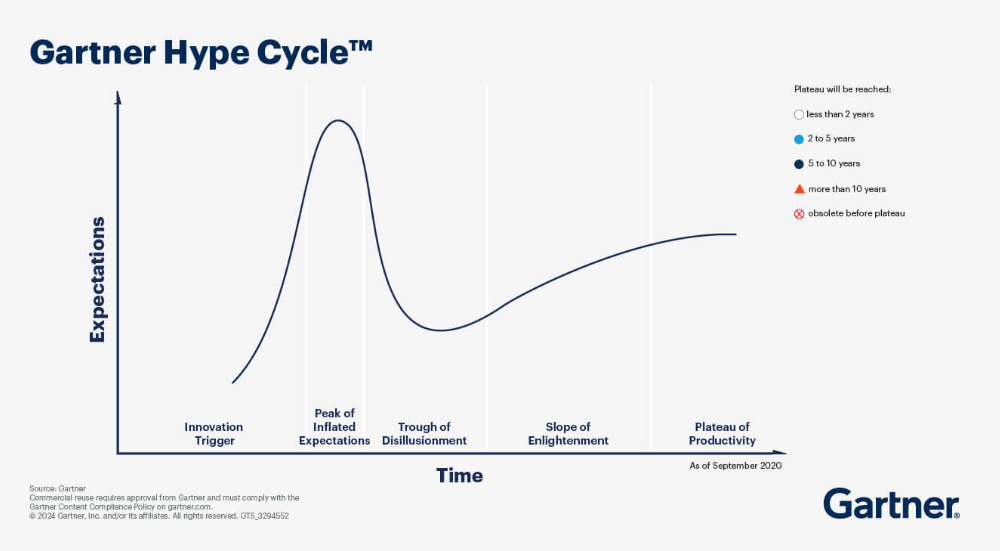

Thus, thinking of the Gartner hype cycle, I think we are at the peak of inflated expectations. This is good because we are one step closer to actually understanding this technology and thus, using it in the most efficient way.

More info:

- https://www.gartner.com/en/articles/hype-cycle-for-artificial-intelligence

- https://youtu.be/QX1Xwzm9yHY

- https://roadmap.sh/prompt-engineering

Purpose

An important element in using AI is creating a good prompt. Since the the current AI is basically an autocomplete on super-steroids, we should create requests (prompts) with context tuned for the best results.

The Open File Sharing project does not have tests yet, so we will use such improved prompts to generate e2e tests. Maybe we will add some unit tests, though we are not necessarily interested in having coverage. Realistically, this is a project which won't see production anytime soon 🙂

Read more:

- https://draghici.net/2025/08/29/accelerated-ai-development-open-file-sharing/

- https://github.com/cristidraghici/open-file-sharing

Introduction to AI

Artificial Intelligence in the form of algorithms has been present in our lives for many-many years already. Youtube suggestions, netflix predictions, social networks news feeds are rather recent examples, believe it or not. In recent history, algorithms have been present in travel (e.g. airline booking systems), finance (e.g. credit scores and credit processing), telecom (e.g. call routing), entertainment (e.g. game ai), consumer electronics (e.g. calculators), heathcare (e.g. MRI imaging), military (e.g. missile guiding systems) and so on.

Generative AI also started many years ago, but the turn to what we use new has happened in 2017 with Google's Transformer Architecture based on the paper "Attention is All You Need". In 2018 OpenAI launched GPT-1. The change it introduces is that in can identify and classify inputs, together with generating original content (though the latter can be debatable). To the day, this technology was pushed forward with Reinforcement learning with human feedback (RLHF), diffusion models and zero-shot learning.

A large language model (LLM) is a language model able to predict the next token, for this having been trained with self-supervised machine learning on large volumes of human input. The ability to predict the next token together with the right constraints leads to all the benefits we have been accustomed in the past few years of using this technology.

Such models include GPT-4, LLaMA and many others. Hugging Face is a good index for many AI models. Other types of models are: text to image, text to video, text to action and more.

Read more:

- https://huggingface.co/

- https://en.wikipedia.org/wiki/Artificial_intelligence

- https://en.wikipedia.org/wiki/Attention_Is_All_You_Need

- https://en.wikipedia.org/wiki/Large_language_model

- https://youtu.be/fkIvmfqX-t0

Prompts and prompting

A prompt is a natural language input given to an AI model where the user tells the model what to do. In other words, the prompt is the method used by the user to interact with the model. As mentioned above, we should always keep in mind that AI models are prediction engines, thus we should be as specific and detailed as possible when creating our queries. This will help with getting a good result and avoiding hallucinations.

It's mandatory that a prompt be an instruction or a question, but we can divide the data inside a query in the following parts:

- Instruction: "Create a 300 word description of Bucharest"

- Question: "What are some good examples of facts to mention about Bucharest?"

- Input data: "Cristian is a Romanian web developer born and raised in Bucharest who is curious to describe his city in the most efficient way to somebody who has never been here. Write a 300 word description of Bucharest for Cristian."

- Examples: "The Houses of Parliament, the Victory Square and the Botanical Garden are good objectives in Bucharest. The Dristor area, the Izvor Park and the Otopeni train station are not good objectives. Write a 300 word description of Bucharest taking these examples into consideration."

Read more:

Prompt engineering

Prompt engineering is a process though which the design of the optimal prompt is achieved, given the generative model and a goal.

It's important that the user has some domain knowledge, to be able to articulate the goal of the prompt together with determining whether the output is good or bad. It's also important that the user has a general ideal of the capabilities of the model used, as each model is trained differently, thus will output results of different quality. To improve efficiency, one might also create and manage templates. It's important to remember that designing a prompt is iterative (so multiple tries will probably be needed to achieve the desired outcome). Another thing to keep in mind is that comparable to the nowadays common IT deliverables, prompts might require version control, QA and regression testing.

More info:

Advanced prompts

We say about the answer of a model that it is sotchastic. This means that it is always randomly determined, thus running the same prompt in the same model with the same parameters can return a different result each time.

To reduce this fluctuation, one has different steps which can be taken:

- check the model's parameters (e.g. reduce the temperature to make the model more conservative, thus decrease the creativity);

- increase the level of detail in the prompt;

- use chain of thought prompting, to encourage the AI to be factual and correct by forcing it to follow a series of steps in its "reasoning". e.g.

- What are the best places to visit in Bucharest in 2025 as a tourist from Europe? Use this format:

Q: <repeat_question>

A: Let's think step by step. <give_reasoning>Therefore, the final answer is <final_answer>. - https://chatgpt.com/share/68e20fc6-cdbc-800c-ab26-77ecd375d9c5

- What are the best places to visit in Bucharest in 2025 as a tourist from Europe? Use this format:

- prompt the AI to cite its sources to avoid hallucinations. It can improve the results, but it is still not a bulletproof solution. To do this, you can add in your prompt something like: "Answer only using reliable sources and cite those sources";

- use forceful language. e.g. all caps and exclamation marks work, refrain from using please;

- you can define tags (e.g.

<begin>,<end>) to guide the model: e.g.- I want a 300 word story about Bucharest. Between <begin> and <end> I will write the introduction to this story:

<begin>

It was a beautiful autumn day.

</end> - https://chatgpt.com/share/68e3dbff-95d4-800c-9456-19129d5ab82c

- I want a 300 word story about Bucharest. Between <begin> and <end> I will write the introduction to this story:

- enable context between prompts, which enable better results;

- keep in mind that if you use an API, the response of the API might be different with each call the model makes;

- remember GPTs read only forward, thus better to put examples before the request;

- you can use affordances (functions defined in the prompt that the model is explicitly instructed to use when responding).

One important thing to keep in mind is that models are pre-trained on existing data. This means that the information they provide on their own, depending even only on the date when the training was done, can be obsolete or incomplete.

Special tags

Apart from the natural language, we can also use special tags to be more explicit in what we want to express.

| Tag | Example Usage |

|---|---|

| begin_of_text | Marks the start of a text sequence. It tells the model “this is where input begins.” |

| end_of_text | Marks the end of a text sequence. Often used to separate examples or stop generation. |

| endofprompt | Indicates the end of the user’s input or instruction — separates prompt from expected model output. |

| system | Begins a system message, which sets behavior or tone for the assistant. |

| user | Begins a user message. Represents input from the human user. |

| assistant | Begins an assistant message. Marks where the model’s reply should go. |

| im_start | im_end |

| fim_prefix | “Fill-in-the-middle” prefix — marks the text before a missing middle section (used in code completion). |

| fim_middle | Marks where the missing code or text goes (the part the model must fill in). |

| fim_suffix | “Fill-in-the-middle” suffix — text that comes after the missing section. |

| diff_marker | Marks the start of diff-style edits (for example, in code patch generation). |

For example, the <|endofprompt|> instructs the language model to interpret what comes after this statement as a completion task.

"Write a 300 word description of Bucharest as a story. <|endofprompt|>It was a beautiful autumn day in my favorite city"

https://chatgpt.com/share/68e213d7-7ffc-800c-8b91-0773e82180e7

TOON Notation

JSON can be optimized to save tokens in a new format, TOON (Token Oriented Object Notation). It is said that it can optimize up to 60% the token consumption.

So starting from the JSON format:

{

"users": [

{"id": 1, "name": "Alice", "role": "admin"},

{"id": 2, "name": "Bob", "role": "user"}

],

"settings": {

"theme": "dark",

"notifications": true

}

}Code language: JSON / JSON with Comments (json).. we can get to this:

users[2]{id,name,role}:

1,Alice,admin

2,Bob,user

settings:

theme: dark

notifications: trueCode language: JavaScript (javascript)More info:

Presets and reusable prompts

Going back to the idea that that one can use preset prompts, you can organize them into github repositories.

A project which evolved nicely is Fabric:

Read more:

- https://github.com/preset-io/promptimize

- https://raffertyuy.com/raztype/ghcp-custom-prompts-structure/

- https://github.com/jujumilk3/leaked-system-prompts

Models

One very important thing to mention is that the answer you will get from the LLM depends greatly on the model you use to get the answer.

One nice list of models can be found here:

On a more abstract level we can think of the most known providers of models:

- https://chatgpt.com/

- https://gemini.google.com/app

- https://chat.deepseek.com/

- https://www.perplexity.ai/

- https://mistral.ai/

- https://claude.ai/

- https://github.com/copilot/

Security

It's interesting that not so many people talk about the security issues of prompts, namely the prompt injection being one which is a great threat to AI systems.

One important risk that comes to mind is related to using an AI browsers. In this situation, you might end up navigating to a page which contains a prompt injection. As it is ingested by the AI, it might trick it to execute hidden actions on your computer.

Read more:

Conclusion

Remember to think critically and use your brain while asking for responses and reading them. Also, you should pay attention at keeping the usage of the models within the legal and ethical boundaries.

Some general ideas to take away from this article:

- AI can help even for getting better prompts for what you want created, helping you cover points of view you might have missed;

- Having some basic general instructions guidelines about the project can help with keeping the project in the same line;

- One could save the prompts and use them as a base for your future projects, as they will produce pretty close results every time they are run if they are detailed enough;

- MCP with agents is a pretty-pretty efficient way to use AI in your projects.

See more: